A new Microsoft Security blog for your consideration.

URL: https://techcommunity.microsoft.com/t5/azure-sentinel/testing-the-new-version-of-the-windows-security-events-connector/ba-p/2483369

Overview:

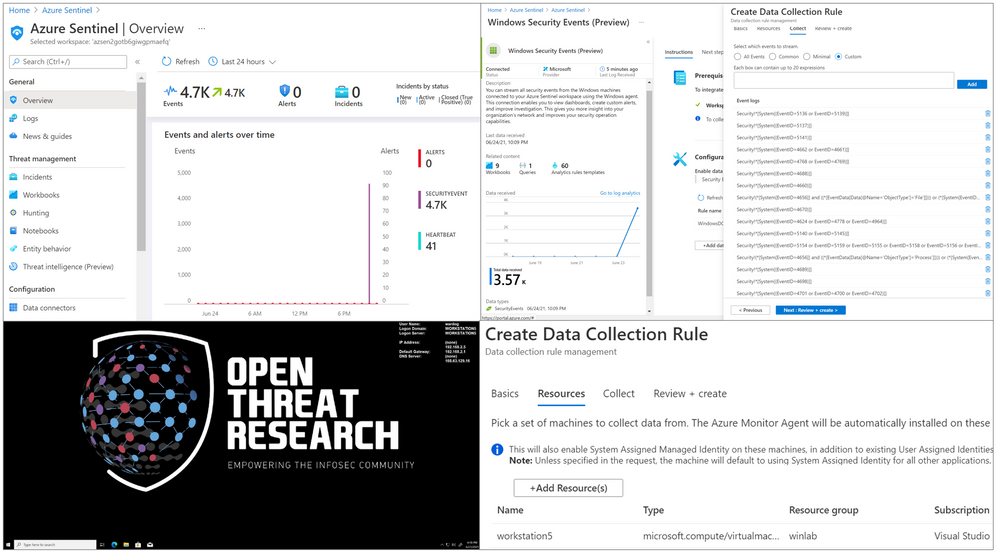

Last week, on Monday, June 14th, 2021, a new version of the Windows Security Events data connector reached public preview. This is the first data connector built on the new generally available Azure Monitor Agent (AMA) and Data Collection Rules (DCR) features from the Azure Monitor ecosystem. As with any other new feature in Azure Sentinel, I wanted to expedite the testing process and empower others in the InfoSec community to learn more about it through a lab environment.

In this post, I will talk about the new features of the data connector and how to automate the deployment of an Azure Sentinel instance with the connector enabled, the creation and association of DCRs, and the installation of the AMA on a Windows workstation. This is an extension of a blog post I wrote last year (2020), where I covered the collection of Windows security events via the Log Analytics Agent (legacy).

Recommended Reading

I highly recommend reading the following blog posts to learn more about the announcement of the new Azure Monitor features and the Windows Security Events data connector:

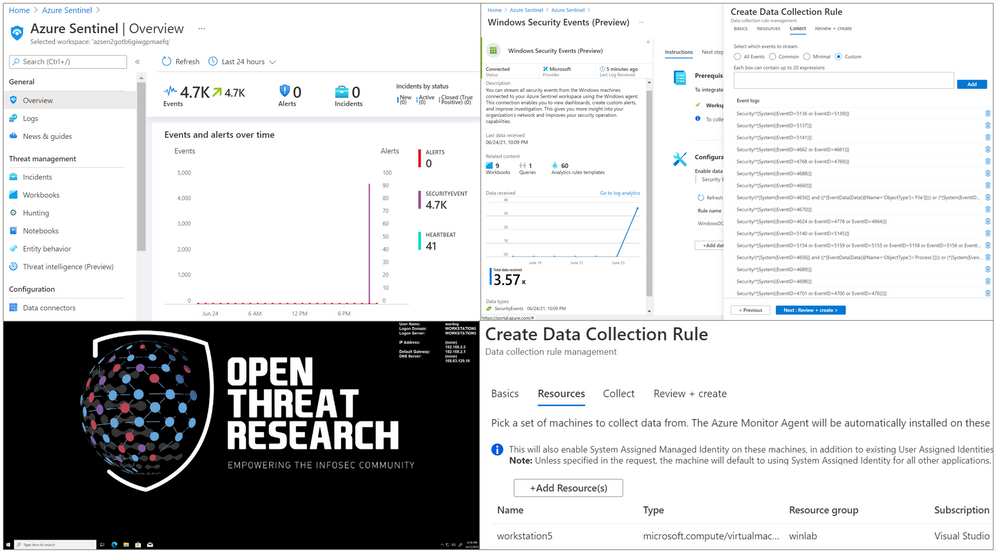

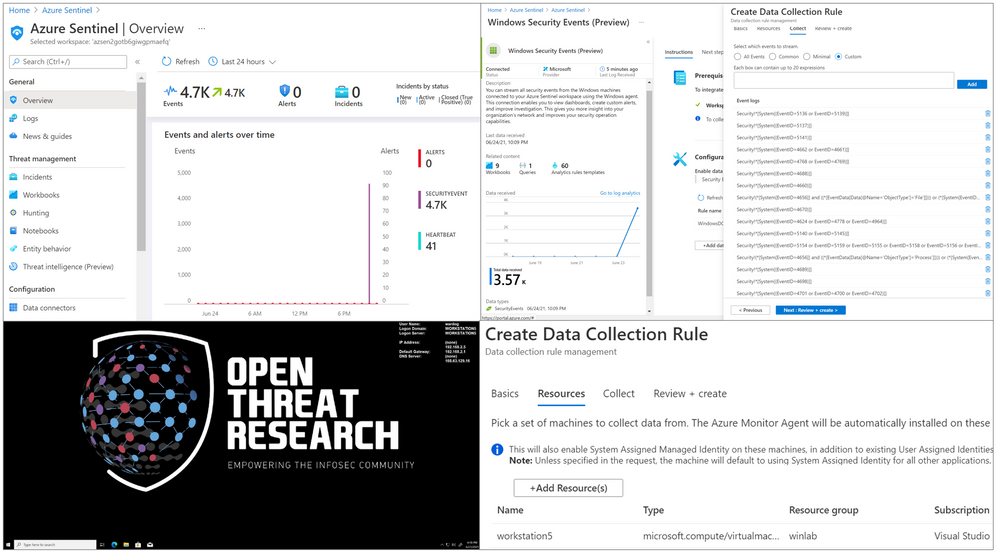

Azure Sentinel To-Go!?

Azure Sentinel2Go is an open-source project maintained and developed by the Open Threat Research community to automate the deployment of an Azure Sentinel research lab and a data ingestion pipeline to consume pre-recorded datasets. Every environment I release through this initiative is an environment I use and test while performing research as part of my role in the MSTIC R&D team. Therefore, I am constantly trying to improve the deployment templates as I cover more scenarios. Feedback is greatly appreciated.

A New Version of the Windows Security Events Connector?

According to Microsoft docs, the Windows Security Events connector lets you stream security events from any Windows server (physical or virtual, on-premises or in any cloud) connected to your Azure Sentinel workspace. After last week, there are now two versions of this connector:

- Security events (legacy version): Based on the Log Analytics Agent (usually known as the Microsoft Monitoring Agent (MMA) or Operations Management Suite (OMS) agent).

- Windows Security Events (new version): Based on the new Azure Monitor Agent (AMA).

In your Azure Sentinel data connectors view, you can now see both connectors:

A New Version? What is New?

Data Connector Deployment

Besides using the Log Analytics Agent to collect and ship events, the old connector uses the Data Sources resource from the Log Analytics Workspace resource to set the collection tier of Windows security events.

The new connector, on the other hand, uses a combination of Data Collection Rules (DCR) and Data Collection Rule Associations (DCRA).

DCRs define what data to collect and where it should be sent. Here is where we can set it to send data to the Log Analytics workspace backing our Azure Sentinel instance.

In order to apply a DCR to a virtual machine, one needs to create an association between the machine and the rule. A virtual machine may have an association with multiple DCRs, and a DCR may have multiple virtual machines associated with it.

For more detailed information about manually setting up the Windows Security Events connector with both the Log Analytics Agent and the Azure Monitor Agent, take a look at this document.

Data Collection Filtering Capabilities

The old connector is not flexible enough to let you choose which specific events to collect. For example, these are the only options to collect data from Windows machines with the old connector:

- All events: All Windows security and AppLocker events.

- Common: A standard set of events for auditing purposes. The Common event set may contain some types of events that aren’t so common. This is because the main point of the Common set is to reduce the volume of events to a more manageable level, while still maintaining full audit trail capability.

- Minimal: A small set of events that might indicate potential threats. This set does not contain a full audit trail. It covers only events that might indicate a successful breach, and other important events that have very low rates of occurrence.

- None: No security or AppLocker events. (This setting is used to disable the connector.)

According to Microsoft docs, these are the pre-defined security event collection groups depending on the tier set:

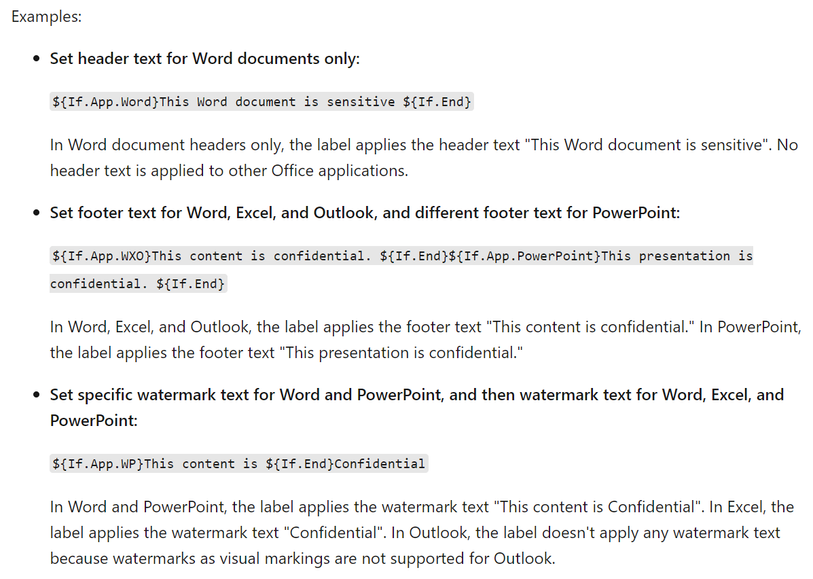

On the other hand, the new connector allows custom data collection via XPath queries. These XPath queries are defined during the creation of the data collection rule and are written in the form of LogName!XPathQuery. Here are a few examples:

- Collect only Security events with Event ID = 4624

Security!*[System[(EventID=4624)]]

- Collect only Security events with Event ID = 4624 or Event ID = 4688

Security!*[System[(EventID=4624 or EventID=4688)]]

- Collect only Security events with Event ID = 4688 and with a process name of consent.exe.

Security!*[System[(EventID=4688)]] and *[EventData[Data[@Name='ProcessName']='C:\Windows\System32\consent.exe']]
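
When a rule needs many event IDs, it can be less error-prone to generate the LogName!XPathQuery strings than to type them. The following is a minimal Python sketch of that idea; the helper name `build_dcr_xpath` is hypothetical and not part of any Azure tooling, and it only covers the simple "match any of these Event IDs" pattern shown above.

```python
def build_dcr_xpath(log_name, event_ids):
    """Return a 'LogName!XPathQuery' string that matches any of the event IDs."""
    if not event_ids:
        raise ValueError("at least one event ID is required")
    # int() rejects anything that is not a plain number before it reaches the query.
    condition = " or ".join(f"EventID={int(event_id)}" for event_id in event_ids)
    return f"{log_name}!*[System[({condition})]]"


single = build_dcr_xpath("Security", [4624])
combined = build_dcr_xpath("Security", [4624, 4688])
print(single)    # Security!*[System[(EventID=4624)]]
print(combined)  # Security!*[System[(EventID=4624 or EventID=4688)]]
```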

You can choose the Custom option to select which events to stream:

Important!

Based on the new connector docs, make sure to query only Windows Security and AppLocker logs. Events from other Windows logs, or from security logs from other environments, may not adhere to the Windows Security Events schema and won’t be parsed properly, in which case they won’t be ingested to your workspace.

Also, the Azure Monitor agent supports XPath queries for XPath version 1.0 only. I recommend reading the XPath 1.0 Limitations documentation before writing XPath queries.

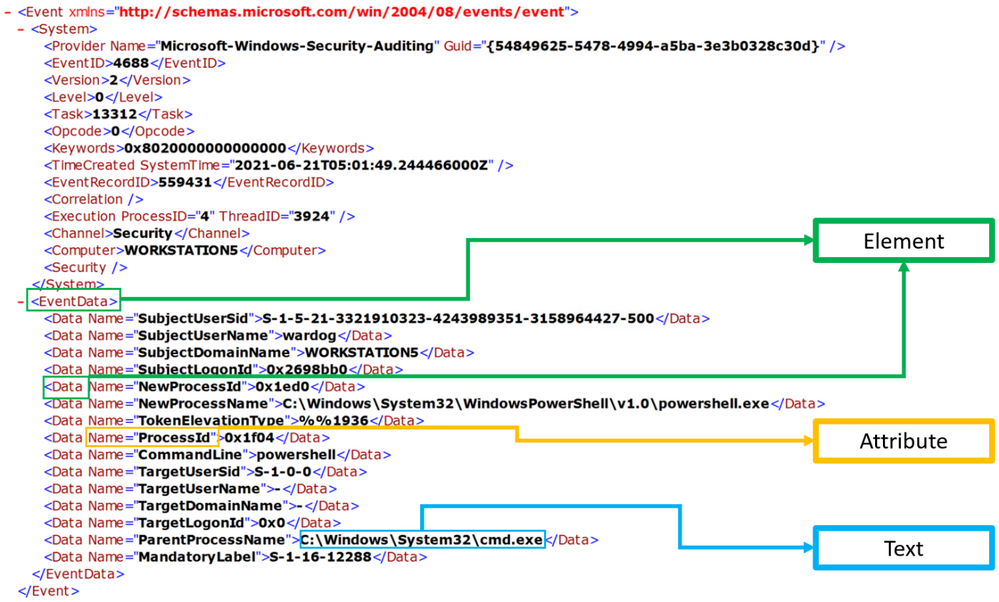

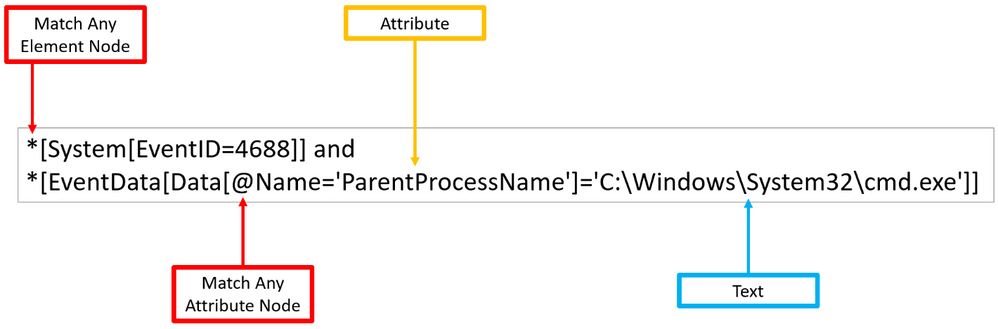

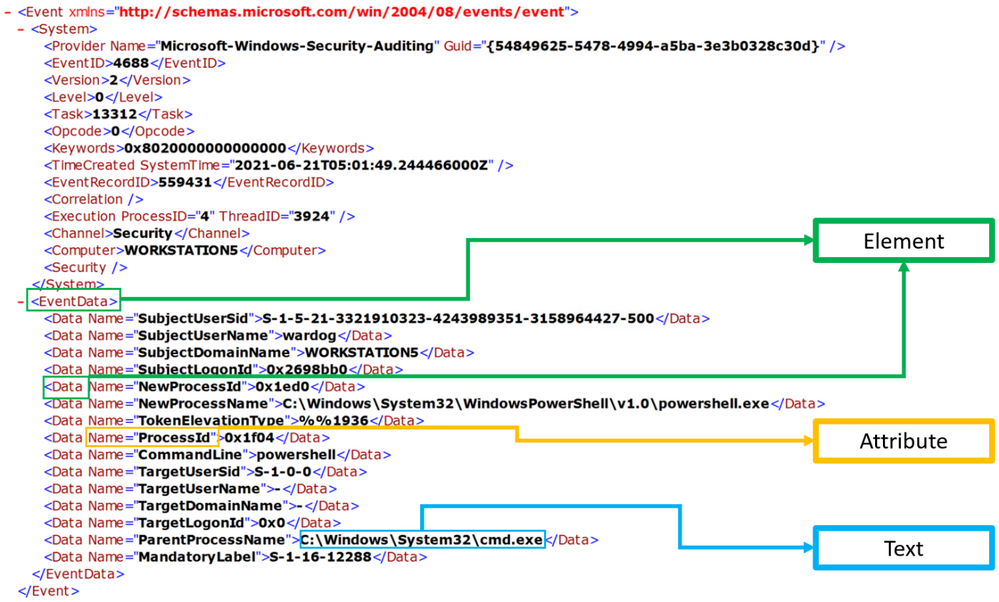

XPath?

XPath stands for XML (Extensible Markup Language) Path language, and it is used to explore and model XML documents as a tree of nodes. Nodes can be represented as elements, attributes, and text.

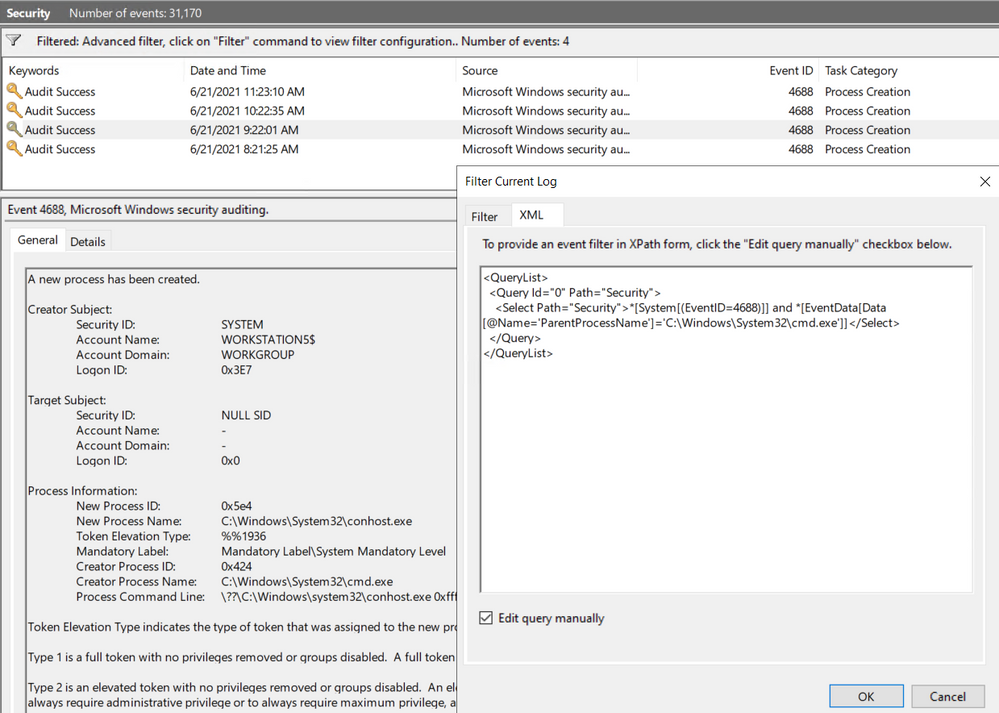

In the image below, we can see a few node examples in the XML representation of a Windows security event:

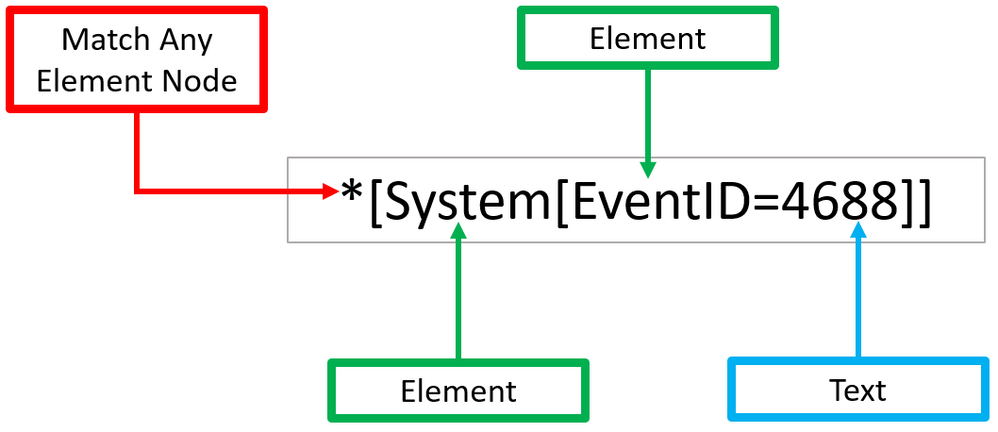
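
To make the three node types concrete outside of a screenshot, here is a small Python sketch that parses a hypothetical, heavily trimmed process-creation event with the standard library. The sample XML is made up for illustration (a real event carries many more fields); only the event namespace and the general System/EventData layout follow the Windows event schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed sample event; not copied from a real log.
SAMPLE_EVENT = """
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <Provider Name="Microsoft-Windows-Security-Auditing" />
    <EventID>4688</EventID>
  </System>
  <EventData>
    <Data Name="NewProcessName">C:\\Windows\\System32\\whoami.exe</Data>
    <Data Name="ParentProcessName">C:\\Windows\\System32\\cmd.exe</Data>
  </EventData>
</Event>
"""

# Windows events live in this XML namespace, so lookups need a prefix mapping.
NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}
root = ET.fromstring(SAMPLE_EVENT)

event_id = root.find("e:System/e:EventID", NS)   # an element node
provider = root.find("e:System/e:Provider", NS)  # an element carrying an attribute

print(event_id.text)    # text node inside the element: 4688
print(provider.attrib)  # attribute node(s): {'Name': 'Microsoft-Windows-Security-Auditing'}

# Each <Data> element combines an attribute (Name) with a text node (the value).
for data in root.findall("e:EventData/e:Data", NS):
    print(data.get("Name"), "=", data.text)
```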

XPath Queries?

XPath queries are used to search for patterns in XML documents and leverage path expressions and predicates to find a node or filter specific nodes that contain a specific value. Wildcards such as ‘*’ and ‘@’ are used to select nodes, and predicates are always embedded in square brackets “[]”.

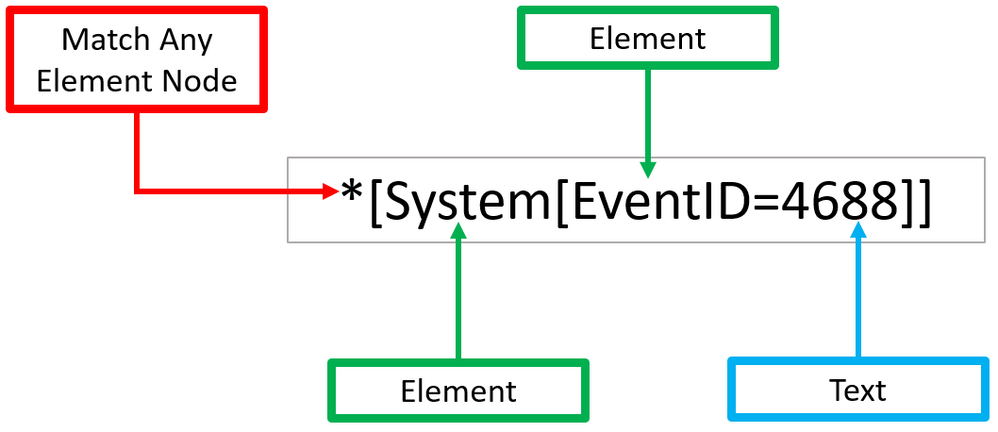

Matching any element node with ‘*’

Using our previous Windows Security event XML example, we can process Windows Security events using the wildcard ‘*’ at the ‘Element’ node level. The example below walks through two ‘Element’ nodes to get to the ‘Text’ node of value ‘4688’.

You can test this basic XPath query via PowerShell.

- Open a PowerShell console as ‘Administrator’.

- Use the Get-WinEvent command to pass the XPath query.

- Use the ‘LogName’ parameter to define what event channel to run the query against.

- Use the ‘FilterXPath’ parameter to set the XPath query.

Get-WinEvent -LogName Security -FilterXPath '*[System[EventID=4688]]'

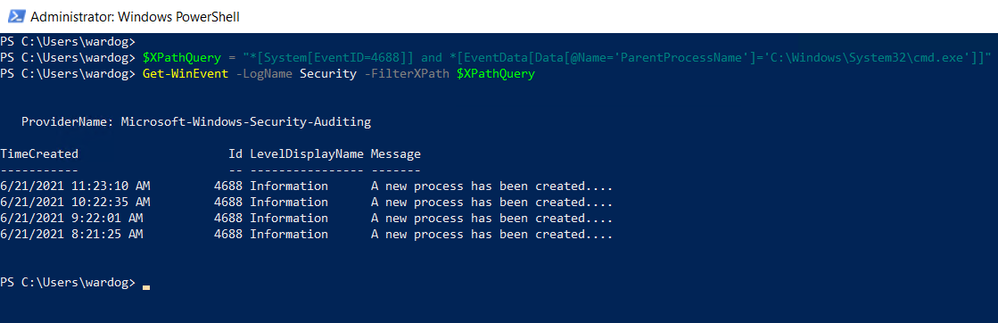

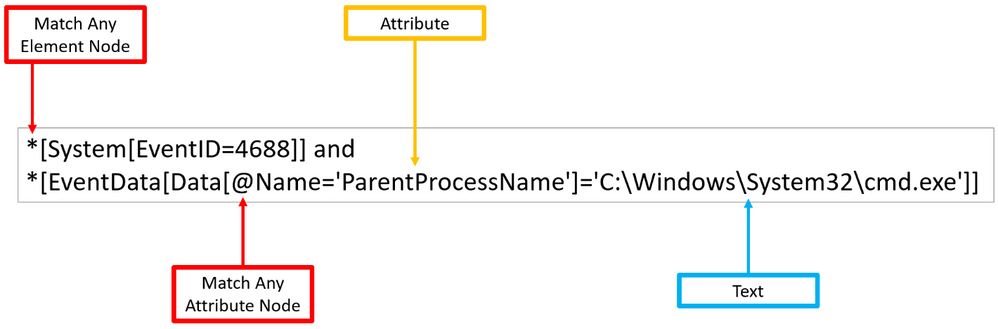

Matching any attribute node with ‘@’

As shown before, ‘Element’ nodes can contain ‘Attributes’, and we can use the wildcard ‘@’ to search for ‘Text’ nodes at the ‘Attribute’ node level. The example below extends the previous one and adds a filter to search for a specific ‘Attribute’ node that contains the following text: ‘C:\Windows\System32\cmd.exe’.

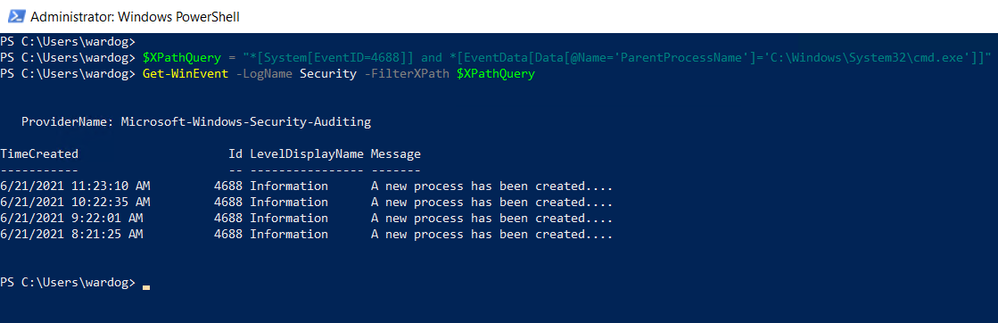

Once again, you can test the XPath query via PowerShell as Administrator.

$XPathQuery = "*[System[EventID=4688]] and *[EventData[Data[@Name='ParentProcessName']='C:\Windows\System32\cmd.exe']]"

Get-WinEvent -LogName Security -FilterXPath $XPathQuery

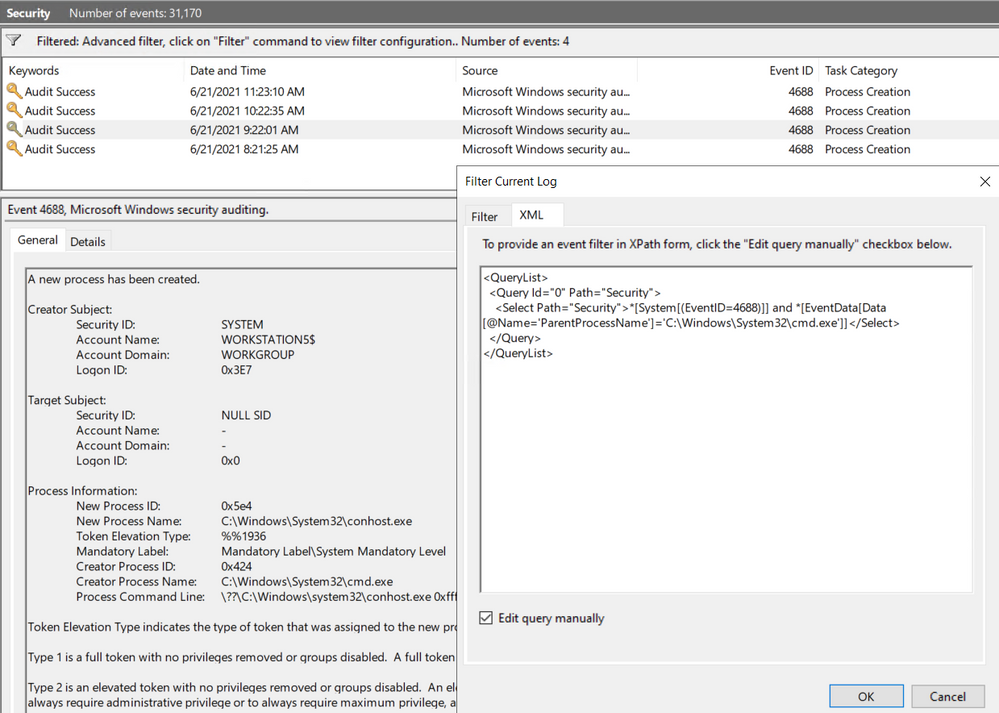
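
If you do not have a Windows endpoint at hand, the logic of that query can be approximated offline. The Python sketch below is an emulation under stated assumptions, not the Windows event log engine: the standard library only supports a subset of XPath, so the compound predicate is split into two lookups, and the event XML is a hypothetical trimmed sample.

```python
import xml.etree.ElementTree as ET

NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}

# Hypothetical trimmed event; {parent} is filled in per test case.
EVENT_TEMPLATE = """<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System><EventID>4688</EventID></System>
  <EventData><Data Name="ParentProcessName">{parent}</Data></EventData>
</Event>"""


def is_child_of(event_xml, event_id, parent_process):
    """Approximate: *[System[EventID=N]] and
    *[EventData[Data[@Name='ParentProcessName']='<path>']]"""
    root = ET.fromstring(event_xml)
    id_node = root.find("e:System/e:EventID", NS)
    # The attribute predicate selects the <Data> element whose Name matches.
    parent_node = root.find("e:EventData/e:Data[@Name='ParentProcessName']", NS)
    return (
        id_node is not None
        and id_node.text == str(event_id)
        and parent_node is not None
        and parent_node.text == parent_process
    )


cmd_child = EVENT_TEMPLATE.format(parent=r"C:\Windows\System32\cmd.exe")
other_child = EVENT_TEMPLATE.format(parent=r"C:\Windows\explorer.exe")

print(is_child_of(cmd_child, 4688, r"C:\Windows\System32\cmd.exe"))    # True
print(is_child_of(other_child, 4688, r"C:\Windows\System32\cmd.exe"))  # False
```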

Can I Use XPath Queries in Event Viewer?

Every time you add a filter through the Event Viewer UI, you can also get to the XPath query representation of the filter. The XPath query is part of a QueryList node, which allows you to define and run multiple queries at once.

We can take our previous example, where we searched for a specific attribute, and run it through the Event Viewer Filter XML UI.

<QueryList>
  <Query Id="0" Path="Security">
    <Select Path="Security">*[System[(EventID=4688)]] and *[EventData[Data[@Name='ParentProcessName']='C:\Windows\System32\cmd.exe']]</Select>
  </Query>
</QueryList>

Now that we have covered some of the main changes and features of the new version of the Windows Security Events data connector, it is time to show you how to create a lab environment where you can test your own XPath queries for research purposes before pushing them to production.

Deploy Lab Environment

- Identify the right Azure resources to deploy.

- Create deployment template.

- Run deployment template.

Identify the Right Azure Resources to Deploy

As mentioned earlier in this post, the old connector uses the Data Sources resource from the Log Analytics Workspace resource to set the collection tier of Windows security events.

This is the Azure Resource Manager (ARM) template I use in Azure-Sentinel2Go to set it up:

Azure-Sentinel2Go/securityEvents.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Sources Azure Resource

{

"type": "Microsoft.OperationalInsights/workspaces/dataSources",

"apiVersion": "2020-03-01-preview",

"location": "eastus",

"name": "WORKSPACE/SecurityInsightsSecurityEventCollectionConfiguration",

"kind": "SecurityInsightsSecurityEventCollectionConfiguration",

"properties": {

"tier": "All",

"tierSetMethod": "Custom"

}

}

However, the new connector uses a combination of Data Collection Rules (DCR) and Data Collection Rule Associations (DCRA).

This is the ARM template I use to create data collection rules:

Azure-Sentinel2Go/creation-azureresource.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Collection Rules Azure Resource

{

"type": "microsoft.insights/dataCollectionRules",

"apiVersion": "2019-11-01-preview",

"name": "WindowsDCR",

"location": "eastus",

"tags": {

"createdBy": "Sentinel"

},

"properties": {

"dataSources": {

"windowsEventLogs": [

{

"name": "eventLogsDataSource",

"scheduledTransferPeriod": "PT5M",

"streams": [

"Microsoft-SecurityEvent"

],

"xPathQueries": [

"Security!*[System[(EventID=4624)]]"

]

}

]

},

"destinations": {

"logAnalytics": [

{

"name": "SecurityEvent",

"workspaceId": "AZURE-SENTINEL-WORKSPACEID",

"workspaceResourceId": "AZURE-SENTINEL-WORKSPACERESOURCEID"

}

]

},

"dataFlows": [

{

"streams": [

"Microsoft-SecurityEvent"

],

"destinations": [

"SecurityEvent"

]

}

]

}

}
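
One detail in this resource that is easy to get wrong is that the stream and destination names must line up between dataSources, destinations, and dataFlows. As a rough sketch (the function name and the placeholder workspace values are hypothetical), the same resource can be produced from a few inputs in Python so that those names are only written once:

```python
import json


def build_dcr_resource(name, location, workspace_id, workspace_resource_id, xpath_queries):
    """Build a dict mirroring the DCR ARM resource shown above."""
    stream = "Microsoft-SecurityEvent"
    destination = "SecurityEvent"
    return {
        "type": "microsoft.insights/dataCollectionRules",
        "apiVersion": "2019-11-01-preview",
        "name": name,
        "location": location,
        "tags": {"createdBy": "Sentinel"},
        "properties": {
            "dataSources": {
                "windowsEventLogs": [
                    {
                        "name": "eventLogsDataSource",
                        "scheduledTransferPeriod": "PT5M",
                        "streams": [stream],
                        "xPathQueries": list(xpath_queries),
                    }
                ]
            },
            "destinations": {
                "logAnalytics": [
                    {
                        "name": destination,
                        "workspaceId": workspace_id,
                        "workspaceResourceId": workspace_resource_id,
                    }
                ]
            },
            # The data flow ties the stream to the named destination.
            "dataFlows": [{"streams": [stream], "destinations": [destination]}],
        },
    }


resource = build_dcr_resource(
    name="WindowsDCR",
    location="eastus",
    workspace_id="AZURE-SENTINEL-WORKSPACEID",                  # placeholder
    workspace_resource_id="AZURE-SENTINEL-WORKSPACERESOURCEID",  # placeholder
    xpath_queries=["Security!*[System[(EventID=4624)]]"],
)
print(json.dumps(resource, indent=2))
```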

One additional step in the setup of the new connector is the association of the DCR with virtual machines.

This is the ARM template I use to create DCRAs:

Azure-Sentinel2Go/association.json at master · OTRF/Azure-Sentinel2Go (github.com)

Data Collection Rule Associations Azure Resource

{

"name": "WORKSTATION5/microsoft.insights/WindowsDCR",

"type": "Microsoft.Compute/virtualMachines/providers/dataCollectionRuleAssociations",

"apiVersion": "2019-11-01-preview",

"location": "eastus",

"properties": {

"description": "Association of data collection rule. Deleting this association will break the data collection for this virtual machine.",

"dataCollectionRuleId": "DATACOLLECTIONRULEID"

}

}
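
Because one DCR can be associated with several virtual machines, the association resource is a natural candidate for generation in a loop. The Python sketch below assumes the same naming pattern as the resource above (VM name, then microsoft.insights, then the rule name); the function name and the second workstation name are hypothetical.

```python
def build_dcr_associations(vm_names, dcr_name, dcr_id, location="eastus"):
    """Return one DCRA resource per virtual machine, all pointing at the same DCR."""
    return [
        {
            "name": f"{vm_name}/microsoft.insights/{dcr_name}",
            "type": "Microsoft.Compute/virtualMachines/providers/dataCollectionRuleAssociations",
            "apiVersion": "2019-11-01-preview",
            "location": location,
            "properties": {
                "description": "Association of data collection rule.",
                "dataCollectionRuleId": dcr_id,
            },
        }
        for vm_name in vm_names
    ]


associations = build_dcr_associations(
    vm_names=["WORKSTATION5", "WORKSTATION6"],  # WORKSTATION6 is a made-up second VM
    dcr_name="WindowsDCR",
    dcr_id="DATACOLLECTIONRULEID",              # placeholder, as in the template
)
for association in associations:
    print(association["name"])
```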

What about the XPath Queries?

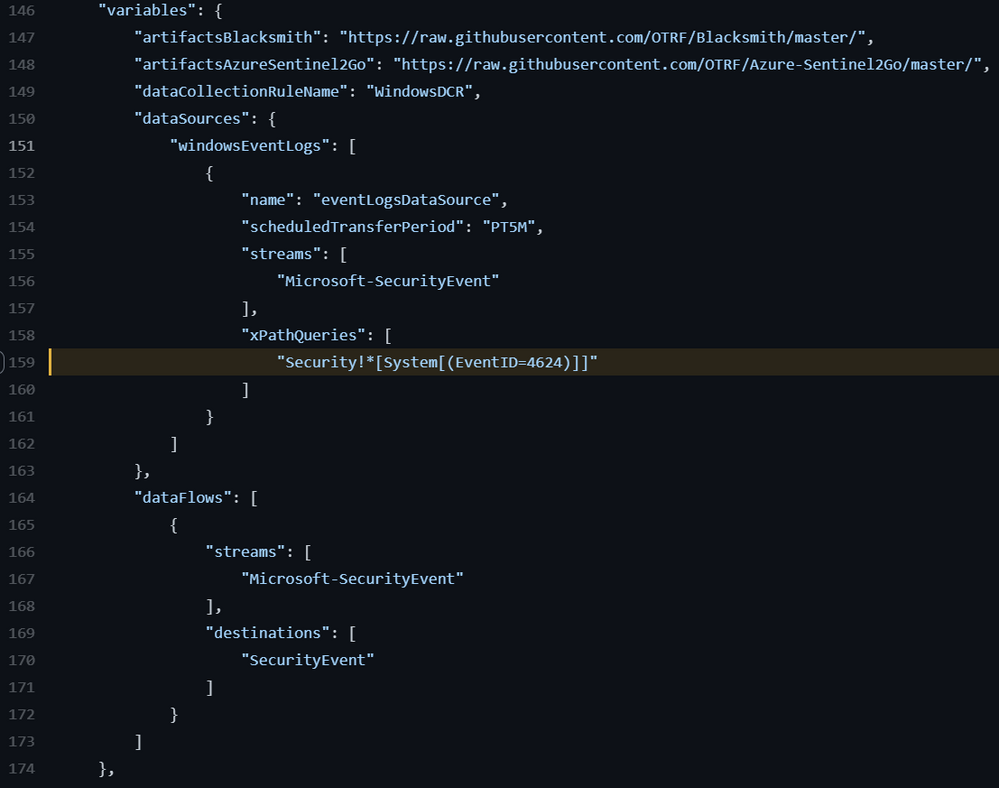

As shown in the previous section, the XPath query is part of the “dataSources” section of the data collection rule resource. It is defined under the ‘windowsEventLogs’ data source type.

"dataSources": {

"windowsEventLogs": [

{

"name": "eventLogsDataSource",

"scheduledTransferPeriod": "PT5M",

"streams": [

"Microsoft-SecurityEvent"

],

"xPathQueries": [

"Security!*[System[(EventID=4624)]]"

]

}

]

}

Create Deployment Template

We can easily add all those ARM templates to an ‘Azure Sentinel & Win10 Workstation’ basic template. We just need to make sure we install the Azure Monitor Agent instead of the Log Analytics one, and enable the system-assigned managed identity in the Azure VM.

Template Resource List to Deploy:

- Azure Sentinel Instance

- Windows Virtual Machine

  - Azure Monitor Agent Installed.

  - System-assigned managed identity Enabled.

- Data Collection Rule

  - Log Analytics Workspace ID

  - Log Analytics Workspace Resource ID

- Data Collection Rule Association

  - Data Collection Rule ID

  - Windows Virtual Machine Resource Name

The following ARM template can be used for our first basic scenario:

Azure-Sentinel2Go/Win10-DCR-AzureResource.json at master · OTRF/Azure-Sentinel2Go (github.com)

Run Deployment Template

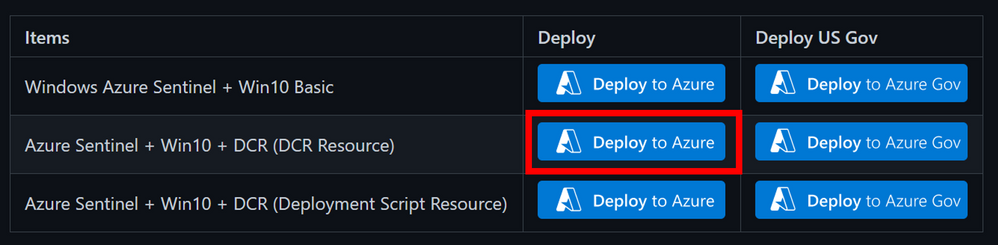

You can deploy the ARM template via a “Deploy to Azure” button or via Azure CLI.

“Deploy to Azure” Button

- Browse to the Azure Sentinel2Go repository.

- Go to grocery-list/Win10/demos.

- Click on the “Deploy to Azure” button next to “Azure Sentinel + Win10 + DCR (DCR Resource)”.

- Fill out the required parameters:

  - adminUsername: admin user to create in the Windows workstation.

  - adminPassword: password for admin user.

  - allowedIPAddresses: Public IP address to restrict access to the lab environment.

- Wait 5-10 mins and your environment should be ready.

Azure CLI

- Download the demo template.

- Open a terminal where you can run Azure CLI from (e.g. PowerShell).

- Log in to your Azure tenant locally.

az login

- Create a resource group (optional).

az group create -n AzSentinelDemo -l eastus

- Deploy the ARM template locally.

az deployment group create -f ./Win10-DCR-AzureResource.json -g MYRESOURCEGROUP --parameters adminUsername=MYUSER adminPassword=MYUSERPASSWORD allowedIPAddresses=x.x.x.x

- Wait 5-10 mins and your environment should be ready.
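
As an alternative to typing each template parameter on the command line, the values can be kept in an ARM parameters file. The Python sketch below only shows the shape of such a file; the function name, the file name in the note that follows, and the placeholder values are assumptions for illustration.

```python
import json


def build_parameters_file(values):
    """Wrap plain name/value pairs in the ARM deployment parameters file layout."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


parameters = build_parameters_file(
    {
        "adminUsername": "MYUSER",          # placeholder
        "adminPassword": "MYUSERPASSWORD",  # placeholder; avoid committing real secrets
        "allowedIPAddresses": "x.x.x.x",    # placeholder
    }
)
print(json.dumps(parameters, indent=2))
```

Saved to a file (for example a hypothetical lab.parameters.json), this can be passed to the deployment with `--parameters @lab.parameters.json` instead of the individual key=value pairs.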

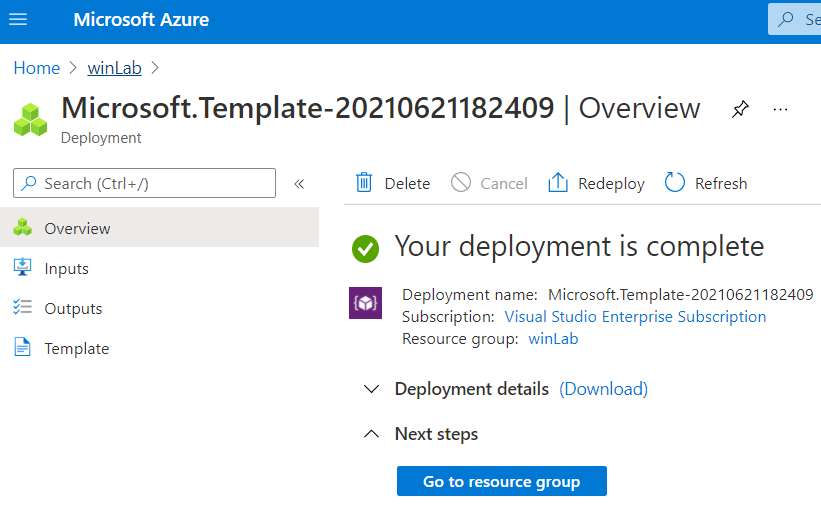

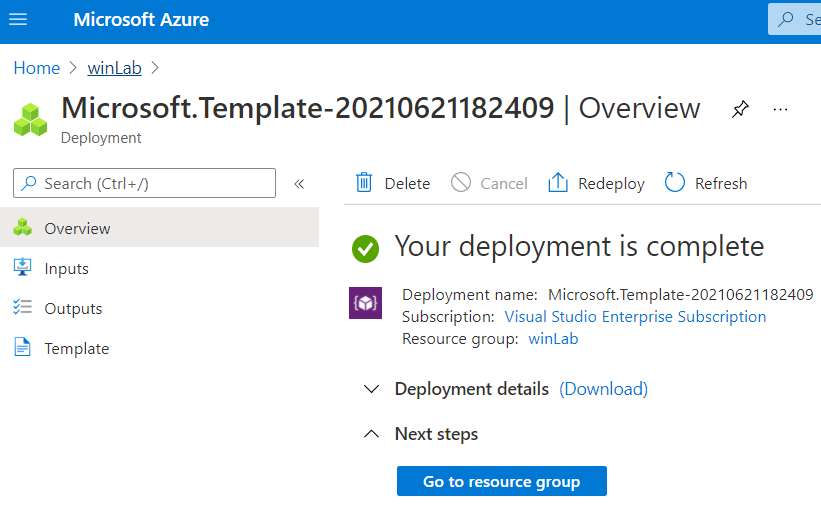

Whether you use the UI or the CLI, you can monitor your deployment by going to Resource Group > Deployments:

Verify Lab Resources

Once your environment is deployed successfully, I recommend verifying every resource that was deployed.

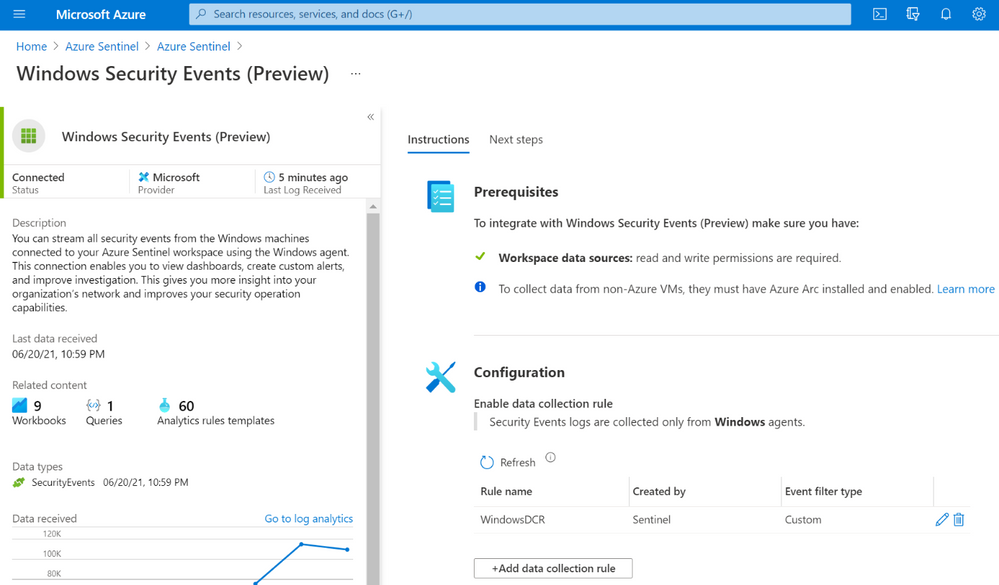

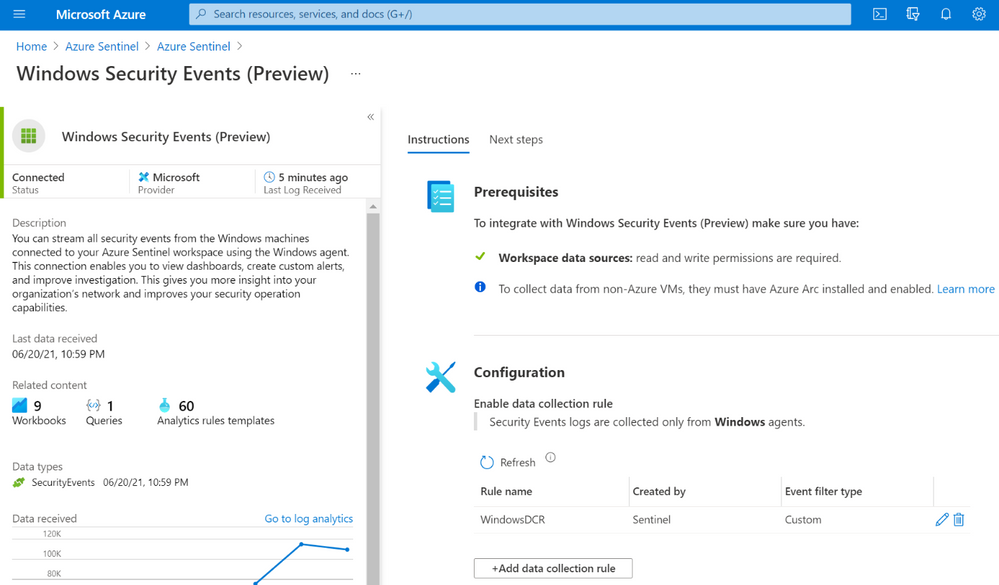

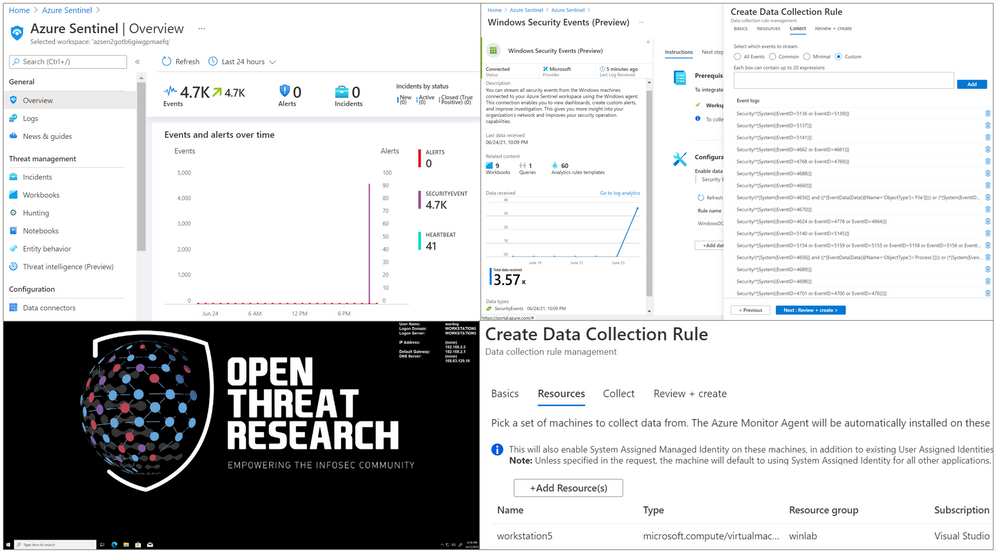

Azure

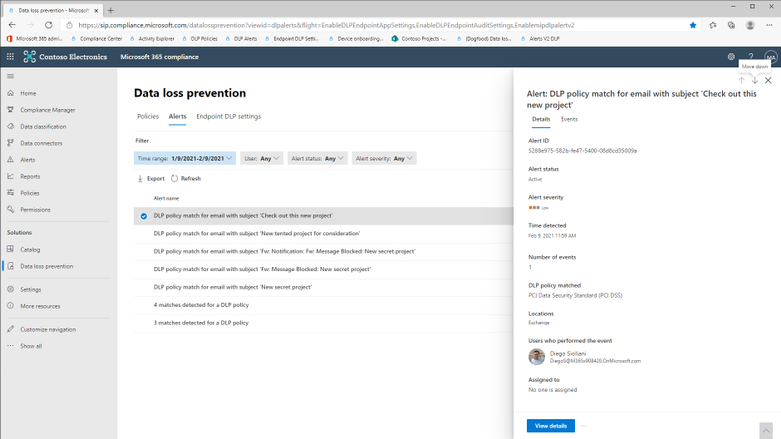

Sentinel New Data Connector

You will see the Windows Security Events (Preview) data

connector enabled with a custom Data

Collection Rules (DCR):

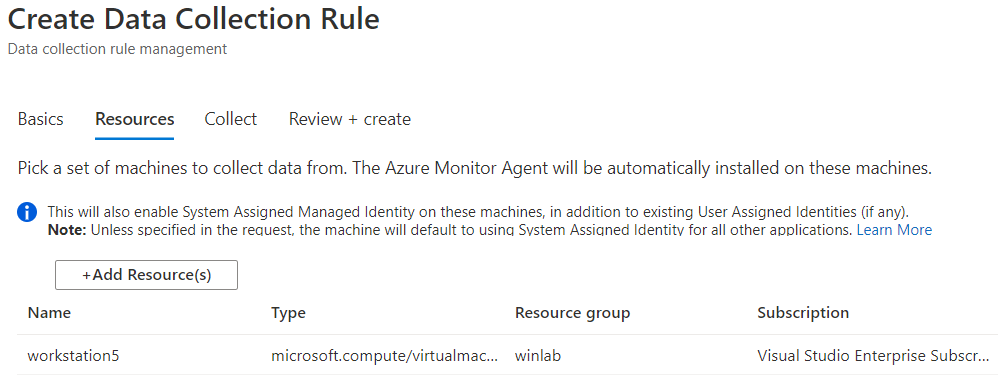

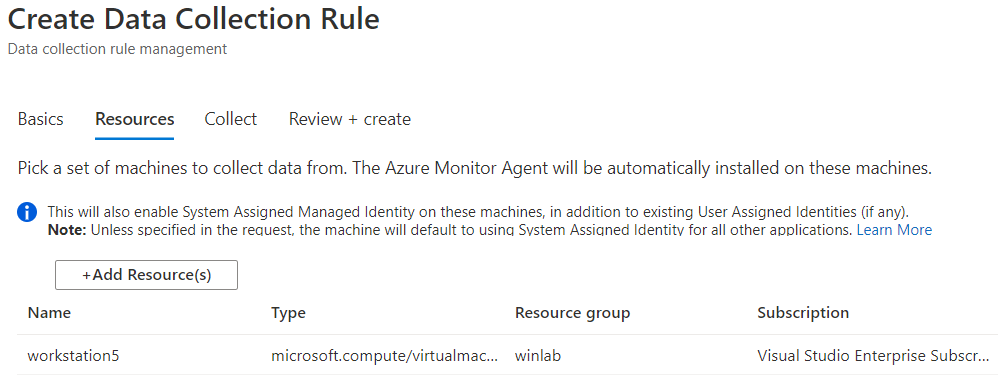

If you edit the custom DCR, you will see the XPath query and the resource that it is associated with. The image below shows the association of the DCR with a machine named workstation5. You can also see that the data collection is set to custom and, for this example, we only set the event stream to collect events with Event ID 4624.

Windows Workstation

I recommend connecting via RDP to the Windows workstation using its public IP address. Go to your resource group and select the Azure VM. You should see the public IP address on the right of the screen. This will generate authentication events, which will be captured by the custom DCR associated with the endpoint.

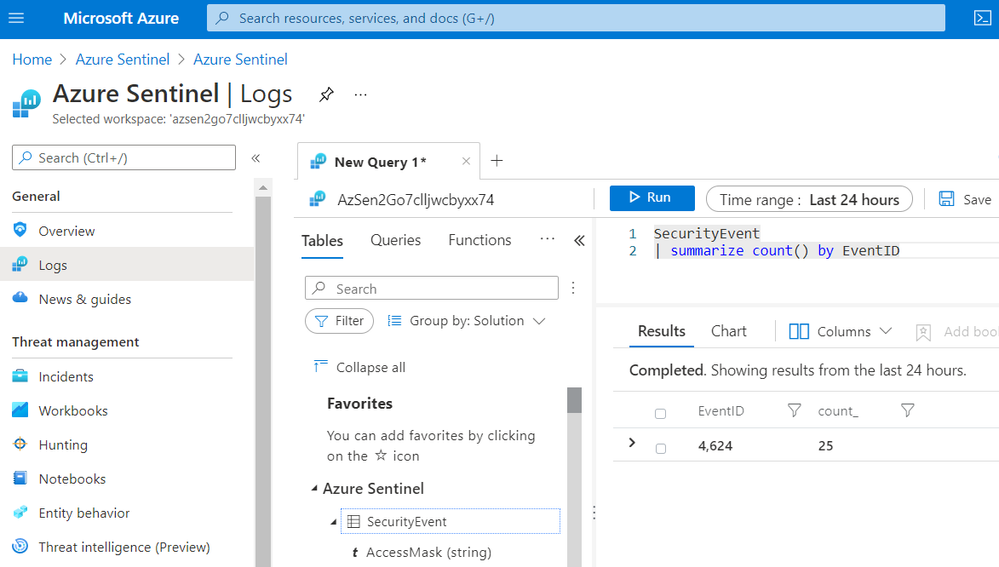

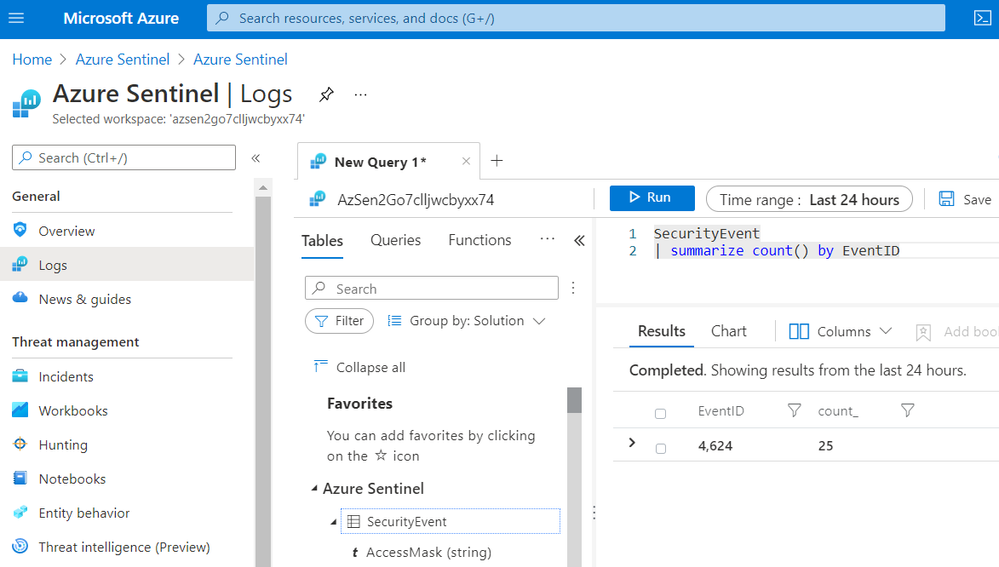

Check Azure Sentinel Logs

Go back to your Azure Sentinel instance, and you should start seeing some events on the Overview page:

Go to Logs and run the following KQL query:

SecurityEvent
| summarize count() by EventID

As you can see in the image below, only events with Event ID 4624 were collected by the Azure Monitor Agent.

You might be asking yourself, “Who would only want to collect events with Event ID 4624 from a Windows endpoint?” Believe it or not, there are network environments where, due to bandwidth constraints, only certain events can be collected. Therefore, this custom filtering capability is very useful for covering more use cases and even saving storage!

Any Good XPath Queries Repositories in the InfoSec Community?

Now that we know the internals of the new connector and how to deploy a simple lab environment, we can test multiple XPath queries depending on our organization, research use cases, and bandwidth constraints. There are a few projects that you can use.

Palantir WEF Subscriptions

One of many repositories out there that contain XPath queries is the ‘windows-event-forwarding’ project from Palantir. The XPath queries are inside the Windows Event Forwarding (WEF) subscriptions. We could take all the subscriptions and parse them programmatically to extract all the XPath queries, saving them in a format that can be used as part of the automatic deployment.

You can follow the steps in this document, available in Azure Sentinel To-Go, to extract XPath queries from the Palantir project.

Azure-Sentinel2Go/README.md at master · OTRF/Azure-Sentinel2Go (github.com)

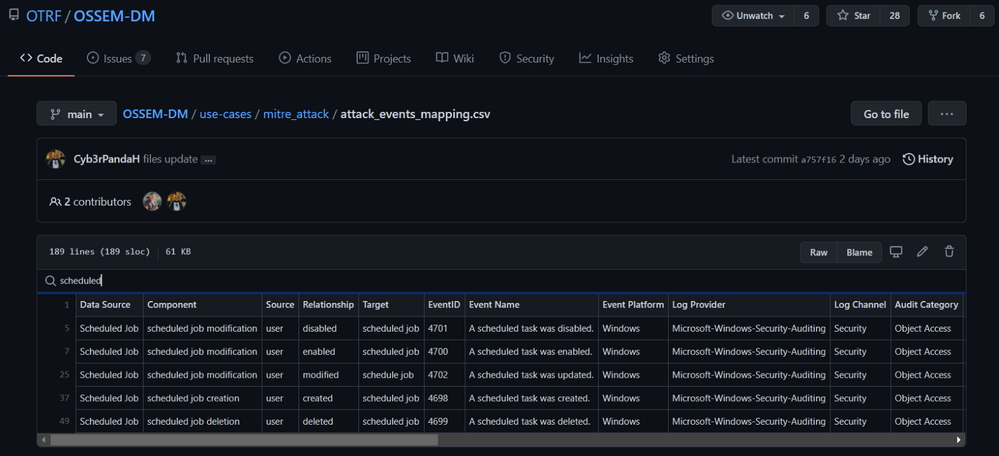

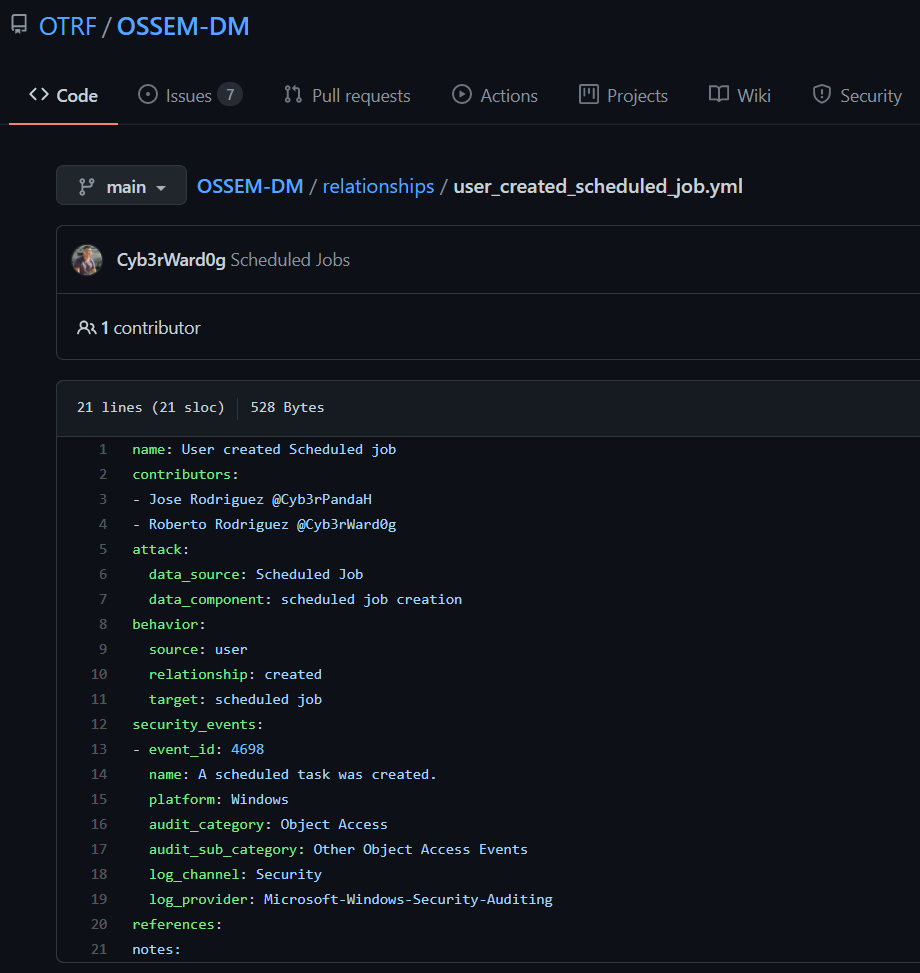

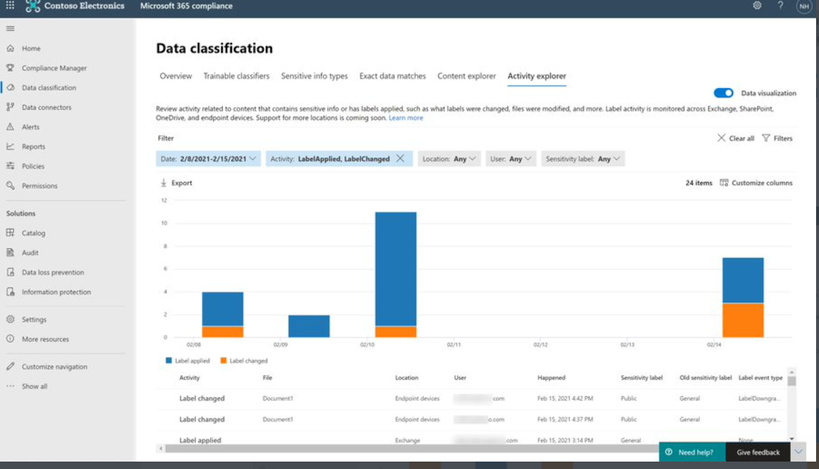

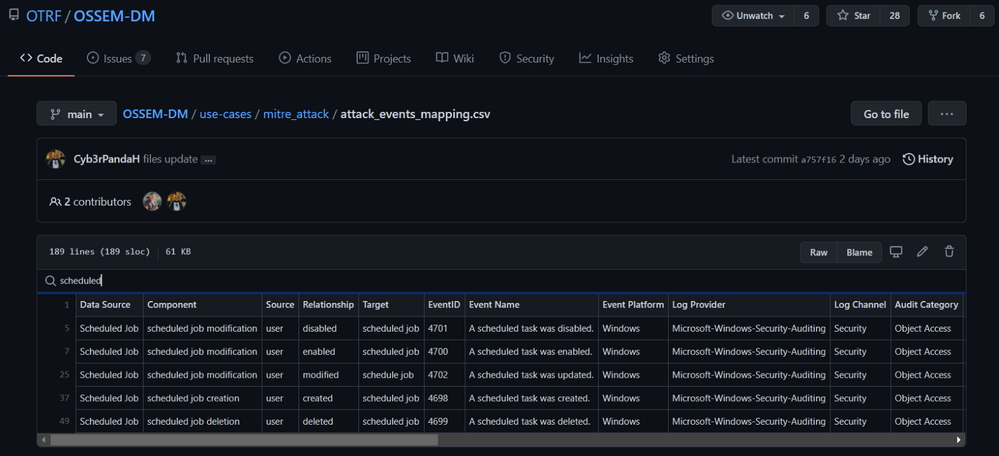

OSSEM Detection Model + ATT&CK Data Sources

From a community perspective, another great resource you can use to extract XPath queries from is the Open Source Security Event Metadata (OSSEM) Detection Model (DM) project, a community-driven effort to help researchers model attack behaviors from a data perspective and share relationships identified in security events across several operating systems.

One of the use cases from this initiative is to map all security events in the project to the new ‘Data Sources’ objects provided by the MITRE ATT&CK framework. In the image below, we can see how the OSSEM DM project provides an interactive document (.CSV) for researchers to explore the mappings (research output):

One of the advantages of this project over others is that all its data relationships are in YAML format, which makes it easy to translate to other formats, for example XML. We can use the Event IDs defined in each data relationship documented in OSSEM DM and create XML files with XPath queries in them.

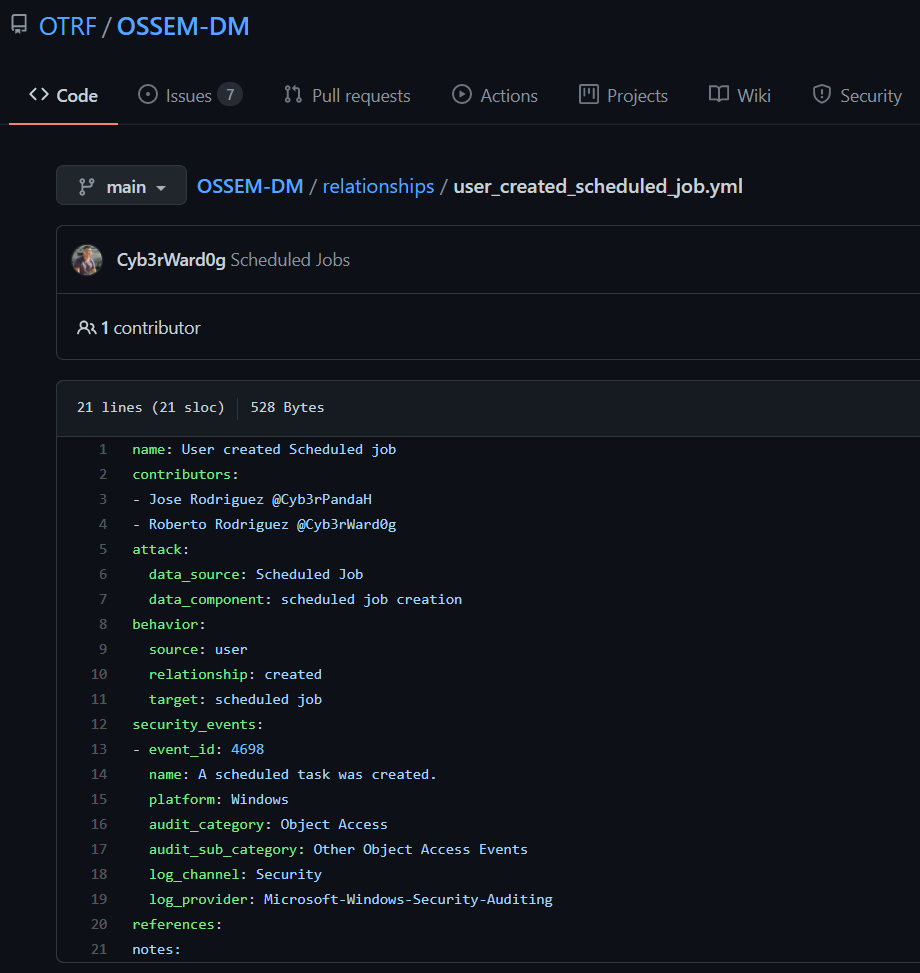

Exploring

OSSEM DM Relationships (YAML Files)

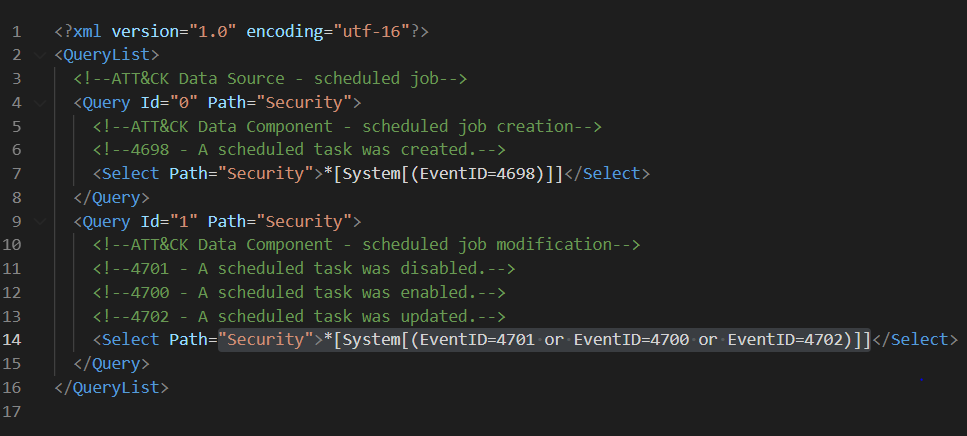

Let’s say we want to use relationships related to scheduled jobs in Windows.

Translate

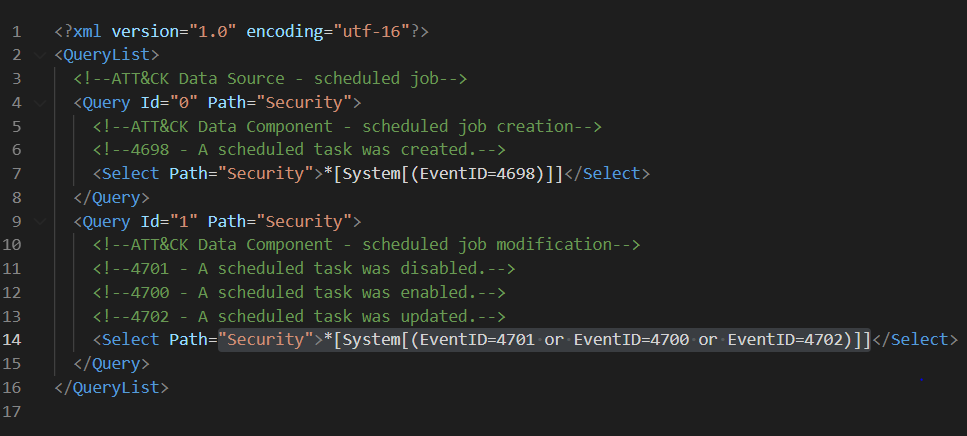

YAML files to XML Query Lists

We can process all the YAML files and export the data to XML files. One thing that I like about this OSSEM DM use case is that we can group the XML files by ATT&CK data sources. This can help organizations organize their data collection in a way that can be mapped to detections or other ATT&CK-based frameworks internally.

We can use the QueryList format to document all ‘scheduled jobs relationships’ XPath queries in one XML file.

I like to document my XPath queries first in this format because it expedites the validation process of the XPath queries locally on a Windows endpoint. You can use that XML file in a PowerShell command to query Windows Security events and make sure there are no syntax issues:

[xml]$scheduledjobs = Get-Content .\scheduled-job.xml

Get-WinEvent -FilterXml $scheduledjobs
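
For readers who want to see what producing such a QueryList file might look like, here is a minimal Python sketch. It is not the OSSEM team's tooling: the function name is hypothetical, and the scheduled-task Event IDs (4698 to 4702) are used only as an illustrative input rather than as the actual content of the OSSEM DM relationships.

```python
import xml.etree.ElementTree as ET


def build_query_list(channel, event_id_groups):
    """Return QueryList XML with one <Select> per group of event IDs."""
    query_list = ET.Element("QueryList")
    query = ET.SubElement(query_list, "Query", {"Id": "0", "Path": channel})
    for event_ids in event_id_groups:
        condition = " or ".join(f"EventID={int(event_id)}" for event_id in event_ids)
        select = ET.SubElement(query, "Select", {"Path": channel})
        select.text = f"*[System[({condition})]]"
    return ET.tostring(query_list, encoding="unicode")


# Illustrative grouping: task created, then task deleted/enabled/disabled/updated.
xml_text = build_query_list("Security", [[4698], [4699, 4700, 4701, 4702]])
print(xml_text)
```

The resulting text can be saved as an .xml file and validated on a Windows endpoint with the Get-WinEvent -FilterXml command shown above.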

Translate

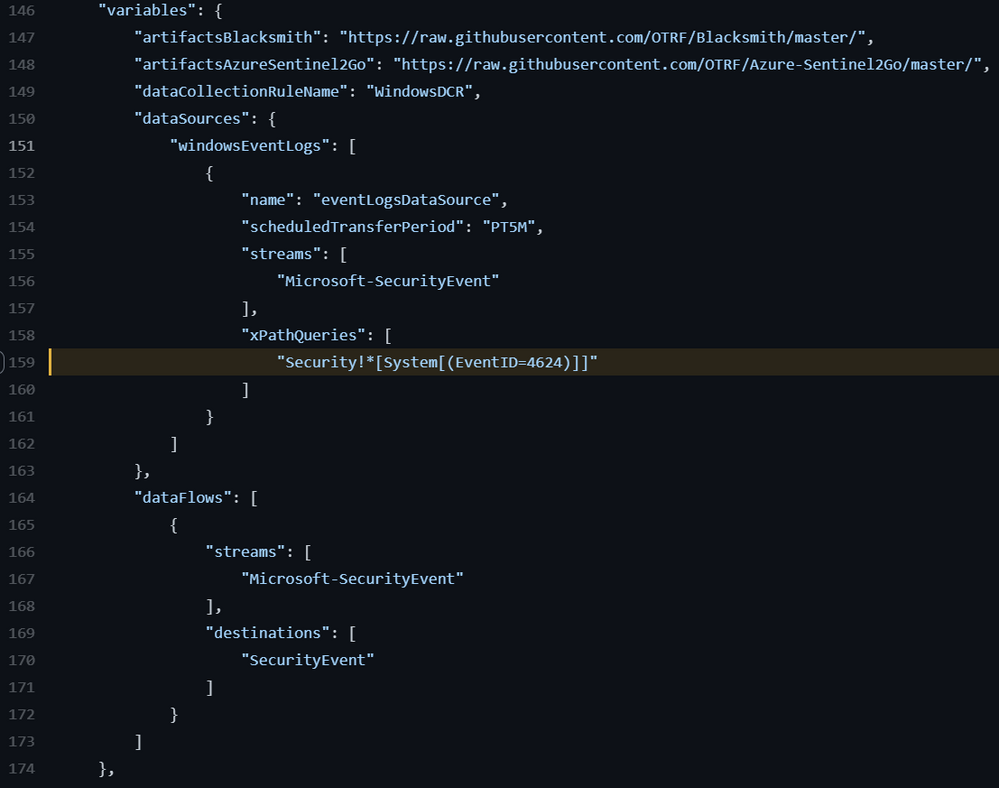

XML Query Lists to DCR Data Source:

Finally, once the XPath queries have been

validated, we could simply extract them from the XML files and put them in a

format that could be used in ARM templates to create DCRs. Do you

remember the dataSources property

of the DCR Azure resource we talked about earlier? What if we could get

the values of the windowsEventLogs data

source directly from a file instead of hardcoding them in an ARM template? The

example below is how it was previously being hardcoded.

"dataSources": {

"windowsEventLogs": [

{

"name": "eventLogsDataSource",

"scheduledTransferPeriod": "PT5M",

"streams": [

"Microsoft-SecurityEvent"

],

"xPathQueries": [

"Security!*[System[(EventID=4624)]]"

]

}

]

}
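
The translation from a validated QueryList to this data source shape is mostly a matter of prefixing each query with its channel. A rough Python sketch of that step follows; the function name and the sample QueryList are hypothetical, and the output layout simply copies the hardcoded example above.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical QueryList, in the same format used by Event Viewer and Get-WinEvent.
QUERY_LIST = """<QueryList>
  <Query Id="0" Path="Security">
    <Select Path="Security">*[System[(EventID=4698)]]</Select>
    <Select Path="Security">*[System[(EventID=4699 or EventID=4700)]]</Select>
  </Query>
</QueryList>"""


def query_list_to_data_source(query_list_xml, transfer_period="PT5M"):
    """Convert every <Select> into a 'LogName!XPathQuery' entry for a DCR."""
    root = ET.fromstring(query_list_xml)
    queries = []
    for select in root.iter("Select"):
        xpath = " ".join(select.text.split())  # collapse line breaks inside a query
        queries.append(f"{select.get('Path')}!{xpath}")
    return {
        "windowsEventLogs": [
            {
                "name": "eventLogsDataSource",
                "scheduledTransferPeriod": transfer_period,
                "streams": ["Microsoft-SecurityEvent"],
                "xPathQueries": queries,
            }
        ]
    }


data_source = query_list_to_data_source(QUERY_LIST)
print(json.dumps(data_source, indent=2))
```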

We could use the XML files created after processing OSSEM DM relationships mapped to ATT&CK data sources to create the following document. We can pass the URL of the document as a parameter in an ARM template to deploy our lab environment:

Azure-Sentinel2Go/ossem-attack.json at master · OTRF/Azure-Sentinel2Go (github.com)

Wait! How Do You Create the Document?

The OSSEM team is contributing and maintaining the JSON file from the previous section in the Azure Sentinel2Go repository. However, if you want to go through the whole process on your own, Jose Rodriguez (@Cyb3rpandah) was kind enough to write every single step to get to that output file in the following blog post:

OSSEM Detection Model: Leveraging Data Relationships to Generate Windows Event XPath Queries (openthreatresearch.com)

Ok, But How Do I Pass the JSON File to Our Initial ARM Template?

In our initial ARM template, we had the XPath query as an ARM template variable, as shown in the image below. We could also have it as a template parameter. However, it is not flexible enough to define multiple DCRs or even update the whole DCR data source object (think about future coverage beyond Windows logs).

Data Collection Rules – CREATE API

For more complex use cases, I would use the DCR Create API. This can be executed via a PowerShell script, which can also be used inside of an ARM template via deployment scripts. Keep in mind that the deployment script resource requires an identity to execute the script. This user-assigned managed identity can be created at deployment time and used to create the DCRs programmatically.
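
To give a feel for what a call to the DCR Create API involves, the Python sketch below only builds the HTTP request and never sends it. It assumes the standard Azure Resource Manager URL layout for Microsoft.Insights/dataCollectionRules and the preview API version used elsewhere in this post; the function name, subscription ID, and token value are placeholders, and the PowerShell script used later in this post remains the reference for the real call.

```python
import json
import urllib.request

API_VERSION = "2019-11-01-preview"  # same preview version as the ARM resources above


def build_dcr_put_request(subscription_id, resource_group, rule_name,
                          location, properties, token):
    """Prepare (but do not send) a PUT request that creates or updates a DCR."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Insights/dataCollectionRules/{rule_name}"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps({"location": location, "properties": properties}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="PUT")


request = build_dcr_put_request(
    subscription_id="00000000-0000-0000-0000-000000000000",  # placeholder
    resource_group="MYGROUP",
    rule_name="WinDCR",
    location="eastus",
    properties={"dataSources": {}, "destinations": {}, "dataFlows": []},  # fill from your file
    token="ACCESS-TOKEN",  # placeholder; obtain a real token from your identity
)
print(request.get_method(), request.full_url)
```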

PowerShell Script

If you have an Azure Sentinel instance without the data connector enabled, you can use the following PowerShell script to create DCRs in it. This is good for testing, and it also works in ARM templates.

Keep in mind that you need a file where you define the structure of the windowsEventLogs data source object used in the creation of DCRs. We created that in the previous section, remember? Here is where we can use the OSSEM Detection Model XPath Queries File 😉

Azure-Sentinel2Go/ossem-attack.json at master · OTRF/Azure-Sentinel2Go (github.com)

FileExample.json

{

"windowsEventLogs": [

{

"Name": "eventLogsDataSource",

"scheduledTransferPeriod": "PT1M",

"streams": [

"Microsoft-SecurityEvent"

],

"xPathQueries": [

"Security!*[System[(EventID=5141)]]",

"Security!*[System[(EventID=5137)]]",

"Security!*[System[(EventID=5136 or EventID=5139)]]",

"Security!*[System[(EventID=4688)]]",

"Security!*[System[(EventID=4660)]]",

"Security!*[System[(EventID=4656 or EventID=4661)]]",

"Security!*[System[(EventID=4670)]]"

]

}

]

}

Run Script

Once you have a JSON file similar to the one in the previous section, you can run the script from a PowerShell console:

.\Create-DataCollectionRules.ps1 -WorkspaceId xxxx -WorkspaceResourceId xxxx -ResourceGroup MYGROUP -Kind Windows -DataCollectionRuleName WinDCR -DataSourcesFile FileExample.json -Location eastus -Verbose

One thing to remember is that a DCR can only have 10 Windows event log data sources (items in the windowsEventLogs list). That is different from the number of XPath queries inside one data source. If you attempt to define more than 10, you will get the following error message:

ERROR

VERBOSE: @{Headers=System.Object[]; Version=1.1; StatusCode=400; Method=PUT;

Content={"error":{"code":"InvalidPayload","message":"Data collection rule is invalid","details":[{"code":"InvalidProperty","message":"'Data Sources. Windows Event Logs' item count should be 10 or less. Specified list has 11 items.","target":"Properties.DataSources.WindowsEventLogs"}]}}}

Also, if you have duplicate XPath queries in one DCR, you will get the following message:

ERROR

VERBOSE: @{Headers=System.Object[]; Version=1.1; StatusCode=400; Method=PUT;

Content={"error":{"code":"InvalidPayload","message":"Data collection rule is invalid","details":[{"code":"InvalidDataSource","message":"'X Path Queries' items must be unique (case-insensitively).

Duplicate names:

Security!*[System[(EventID=4688)]],Security!*[System[(EventID=4656)]].","target":"Properties.DataSources.WindowsEventLogs[0].XPathQueries"}]}}}
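
Both errors can be caught locally before calling the API. The following Python sketch checks a data sources document for the two conditions reported above: more than 10 windowsEventLogs items, and XPath queries that repeat when compared case-insensitively. The function name is hypothetical, and the limit of 10 is taken from the error message rather than from official documentation.

```python
def validate_data_sources(data_sources, max_items=10):
    """Return a list of human-readable problems; an empty list means no issue was found."""
    problems = []
    items = data_sources.get("windowsEventLogs", [])
    if len(items) > max_items:
        problems.append(
            f"windowsEventLogs has {len(items)} items; the limit is {max_items}"
        )
    for index, item in enumerate(items):
        seen = set()
        duplicates = []
        for query in item.get("xPathQueries", []):
            key = query.lower()  # the service compares queries case-insensitively
            if key in seen and query not in duplicates:
                duplicates.append(query)
            seen.add(key)
        if duplicates:
            problems.append(
                f"windowsEventLogs[{index}] has duplicate queries: {', '.join(duplicates)}"
            )
    return problems


sample = {
    "windowsEventLogs": [
        {
            "xPathQueries": [
                "Security!*[System[(EventID=4688)]]",
                "Security!*[System[(EventID=4656)]]",
                "security!*[system[(eventid=4688)]]",  # same query, different case
            ]
        }
    ]
}
issues = validate_data_sources(sample)
print(issues)
```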

ARM Template: DeploymentScript Resource

Now that you know how to use a PowerShell script to create DCRs directly in your Azure Sentinel instance, we can use it inside of an ARM template and make it point to the JSON file, contributed by the OSSEM DM project, that contains all the XPath queries in the right format.

This is the template I use to put it all together:

Azure-Sentinel2Go/Win10-DCR-DeploymentScript.json at master · OTRF/Azure-Sentinel2Go (github.com)

What about the DCR Associations?

You still need to associate the DCR with a virtual machine. However, we can keep doing that within the template by calling the DCRA Azure resource as a linked template from the main template. Just in case you were wondering how I call the linked template from the main template, I do it this way:

Azure-Sentinel2Go/Win10-DCR-DeploymentScript.json at master · OTRF/Azure-Sentinel2Go (github.com)

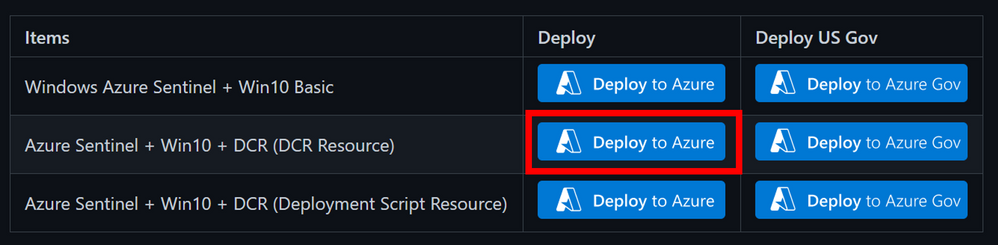

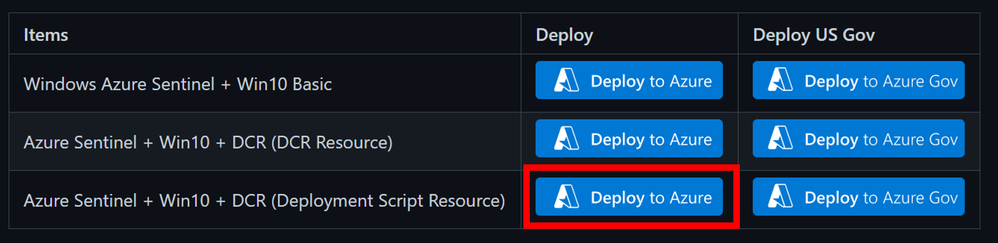

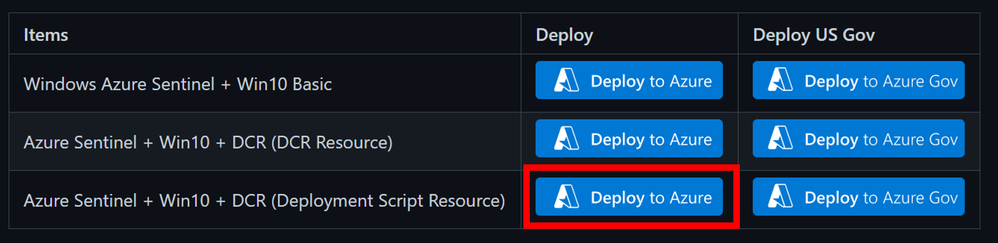

How Do I Deploy the New Template?

The same way we deployed the initial one. If you want the Easy Button, then simply browse to the URL below and click on the blue button highlighted in the image below:

Link: Azure-Sentinel2Go/grocery-list/Win10/demos at master · OTRF/Azure-Sentinel2Go (github.com)

Wait 5-10 mins!

Enjoy it!

That’s it! You now know two ways to deploy and test the new data connector and the Data Collection Rules features with XPath query capabilities. I hope this was useful. Those were all my notes from testing and developing templates to create a lab environment so that you can also expedite the testing process!

Feedback is greatly appreciated! Thank you to the OSSEM team and the Open Threat Research (OTR) community for helping us operationalize the research they share with the community! Thank you, Jose Rodriguez.

Demo Links

References